Web maps in 2025

As the developer of the Trackserver WordPress plugin, I have a special interest in web mapping solutions, and in particular those that are not Google Maps 🙂 The landscape has changed quite a bit over the years, and more and more tools have seen the light of day. In this blog post, I want to explore the current landscape and see what it means for Trackserver.

To publish an interactive map on a web page, you need at least these two components:

- A source (server) for map tiles;

- A client-side JavaScript library for rendering the map.

For a long time, there were not many options for either of these. There was only one type of map tiles, called ‘raster tiles’, and you would get them from OpenStreetMap directly or from one of the providers listed on their website. Over time, commercial tile providers like Mapbox, MapTiler and Stadia Maps entered the scene, providing tiles as part of a larger mapping ecosystem. They generally provide access to tiles for free, but with usage limits. That makes sense, because hosting isn’t free.
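To make the idea of a raster tile source a bit more concrete, here is a minimal sketch of how a client works out which tile to request. It assumes the common Z/X/Y ("slippy map") addressing in Web Mercator projection and uses the public OpenStreetMap URL template as an example. The function names `lonLatToTile` and `tileUrl` are my own, not part of any library.

```typescript
// Convert a WGS84 coordinate to Z/X/Y tile indices at a given zoom level
// (Web Mercator, origin at the top-left corner of the world map).
function lonLatToTile(lon: number, lat: number, zoom: number): { x: number; y: number } {
  const n = 2 ** zoom; // number of tiles along each axis at this zoom level
  const latRad = (lat * Math.PI) / 180;
  const x = Math.floor(((lon + 180) / 360) * n);
  const y = Math.floor(
    ((1 - Math.log(Math.tan(latRad) + 1 / Math.cos(latRad)) / Math.PI) / 2) * n
  );
  return { x, y };
}

// Fill in a {z}/{x}/{y} URL template, the format most tile providers document.
function tileUrl(template: string, z: number, x: number, y: number): string {
  return template
    .replace("{z}", String(z))
    .replace("{x}", String(x))
    .replace("{y}", String(y));
}

// Example: the tile containing longitude 0, latitude 0 at zoom level 1.
const zoom = 1;
const tile = lonLatToTile(0, 0, zoom);
const url = tileUrl("https://tile.openstreetmap.org/{z}/{x}/{y}.png", zoom, tile.x, tile.y);
console.log(url);
```

A library like Leaflet does this calculation for every tile in the visible viewport, which is why a tile layer can be configured with nothing more than the URL template.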

Client side, there were two options that could be considered mainstream: LeafletJS and OpenLayers. Both are still in active development today, or so it seems. Leaflet’s last public release is nearly two years old.[1]

In 2013, Mapbox came up with a new mapping technology called ‘vector tiles’. Where raster tiles are just pre-rendered square pictures, each showing a small piece of the map, a vector tile is essentially a machine-readable description of geographic data, encoded in binary form. To render it in visual form, you need to provide a style sheet that describes how to style and format the different map elements. The rendering is then done on the client side. This became feasible with browser canvas improvements and the arrival of WebGL. Mapbox Vector Tiles (MVT) are the dominant format for this type of tile.
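As an illustration of what such a style sheet looks like, here is a small sketch in the style document format (version 8) used by Mapbox GL JS and MapLibre GL JS, written as a typed object. The interfaces cover only a tiny subset of the format, and the tile URL, the source name `basemap` and the source-layer names `water` and `transportation` are assumptions: the real layer names depend on the schema of the tiles you use. The helper `layersWithMissingSource` is hypothetical and only there as a sanity check.

```typescript
// A tiny subset of the style document structure, just enough for this sketch.
interface VectorSource {
  type: "vector";
  tiles: string[];
  maxzoom?: number;
}

interface Layer {
  id: string;
  type: "background" | "fill" | "line";
  source?: string;
  "source-layer"?: string;
  paint?: Record<string, unknown>;
}

interface Style {
  version: 8;
  sources: Record<string, VectorSource>;
  layers: Layer[];
}

const style: Style = {
  version: 8,
  sources: {
    // Hypothetical tile endpoint; replace it with your provider's URL.
    basemap: { type: "vector", tiles: ["https://tiles.example.com/{z}/{x}/{y}.pbf"], maxzoom: 14 },
  },
  // Layers are drawn in order, so the background comes first.
  layers: [
    { id: "background", type: "background", paint: { "background-color": "#f8f4f0" } },
    { id: "water", type: "fill", source: "basemap", "source-layer": "water",
      paint: { "fill-color": "#a0c8f0" } },
    { id: "roads", type: "line", source: "basemap", "source-layer": "transportation",
      paint: { "line-color": "#888888", "line-width": 1.5 } },
  ],
};

// Sanity check: return the ids of layers that refer to an undefined source.
function layersWithMissingSource(s: Style): string[] {
  return s.layers
    .filter((l) => l.source !== undefined && !(l.source in s.sources))
    .map((l) => l.id);
}

console.log(layersWithMissingSource(style)); // an empty array for the style above
```

The point to notice is that the tiles themselves contain no colours or line widths at all. Changing the look of the map means editing this document, not re-rendering tiles on a server.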

Vector tiles have some advantages over raster tiles:

- They’re generally smaller, so more efficient to transport;

- Better visual quality, because they can be rendered at any zoom level or screen resolution without pixelation;

- Flexible, client-side styling – easy changing of how certain elements are drawn;

- Data-driven (dynamic) styling;

- Easier interaction with specific map elements, because they are in fact separate elements;

- Not directly a feature of vector tiles, but because WebGL is generally used to render them, features like 3D maps, hill shading and map rotation become easier to implement.
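To show what data-driven styling means in practice, here is a toy sketch in which the colour and width of a road are computed from the feature's own attributes and the current zoom level. Real renderers express this declaratively inside the style sheet. The plain function below only mimics the idea, and the property name `class`, its values and the colours are all assumptions for illustration.

```typescript
// A decoded vector tile feature, reduced to its attribute table.
interface Feature {
  properties: Record<string, string | number>;
}

interface LinePaint {
  color: string;
  width: number;
}

// Pick paint values per feature instead of using one fixed style per layer.
function roadPaint(feature: Feature, zoom: number): LinePaint {
  const cls = feature.properties["class"];
  const color = cls === "motorway" ? "#e66e3c" : cls === "path" ? "#9b7653" : "#888888";
  // Motorways are drawn twice as wide; width grows with zoom, clamped to 1..6 pixels.
  const base = cls === "motorway" ? 2 : 1;
  const width = Math.min(6, Math.max(1, base * (zoom - 8) * 0.5));
  return { color, width };
}

console.log(roadPaint({ properties: { class: "motorway" } }, 14));
console.log(roadPaint({ properties: { class: "residential" } }, 5));
```

With raster tiles, a decision like this is baked into the image on the server. With vector tiles it can be made, and changed, in the browser.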

While OpenLayers has had support for vector tiles since 2014, LeafletJS still does not support vector tiles natively. This makes me wonder if Leaflet is slowly starting to lose its relevance. With the adoption of vector tiles, more client-side libraries have been developed. Perhaps not surprisingly, it is Mapbox’s own library (Mapbox GL JS) that leads the field of vector tile renderers. For a long time, the client was free and open source software, released under the 3-Clause BSD license, but with the release of v2.0 in December 2020, Mapbox moved to a proprietary license. Also, the SDK is tailored to be used with the Mapbox platform, and may be less suitable for use with other tile sources.

The Mapbox license change led to the creation of a derived version (a fork), called MapLibre GL JS. It is now developed independently, and still released under the 3-Clause BSD license. As of 2025, OpenLayers and MapLibre GL JS seem to be the top contenders for rendering vector-based maps. It is also worth mentioning MapTiler and their JS SDK. This is based on MapLibre GL JS, but has extra functionality to work with MapTiler’s platform. While this is not unlike Mapbox GL JS, MapTiler SDK JS is fully open source (BSD license) and it is specifically designed to stay compatible with other tile sources.

So, what does this all mean for Trackserver, if I wanted it to support vector tiles?

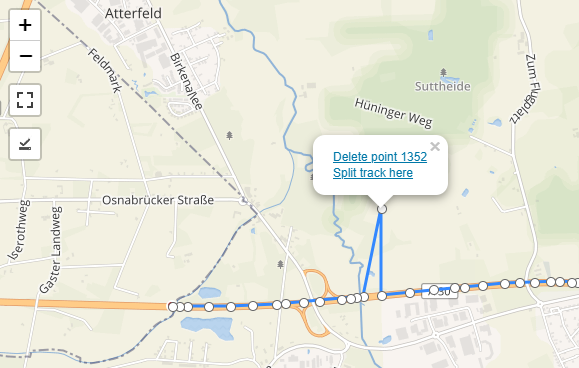

First of all, Trackserver is built on LeafletJS and it uses a few plugins for specific functionality, including editing tracks (GeoJSON geometries) in the WordPress backend. Rewriting all of this functionality on top of OpenLayers or MapLibre GL JS would not be feasible. I can hardly keep up with PHP versions and browser changes as it stands, and I would much rather spend my time on building new features than on doing a complete rewrite.

Fortunately, there are possible workarounds. MapLibre GL JS can be made to work with Leaflet through a plugin, and there is Tangram, a library for rendering vector data with WebGL, that also works as a Leaflet plugin. Whether they work well, and which of these is the most stable, compatible and feature-rich, remains to be researched and tested.

Update 3 June 2025: There is also a project called ‘protomaps-leaflet’, which implements a vector tile renderer as a Leaflet plugin. It was built mainly to render PMTiles with Leaflet, but it can also use traditional-style (Z/X/Y) URLs. It’s more like a drop-in replacement for the native raster tile renderer, and it does not support style sheets like Mapbox GL JS and MapLibre GL JS do. It also doesn’t use WebGL, but renders the tiles with the canvas API.


Footnotes

1. With LeafletJS v1.9, released in 2022 and last updated in May 2023, the v1 major version was put into maintenance mode, and some plans for v2 were announced. However, as far as I know, no development on version 2 has been done in the public GitHub repository and no release date for Leaflet v2 has been planned. Update of 28 May 2025: on 18 May 2025, Leaflet v2.0.0-alpha was released on GitHub. This marks the first step in the modernization of Leaflet. It is not yet known whether native support for vector tiles is on the roadmap.